Image credit: Pexels

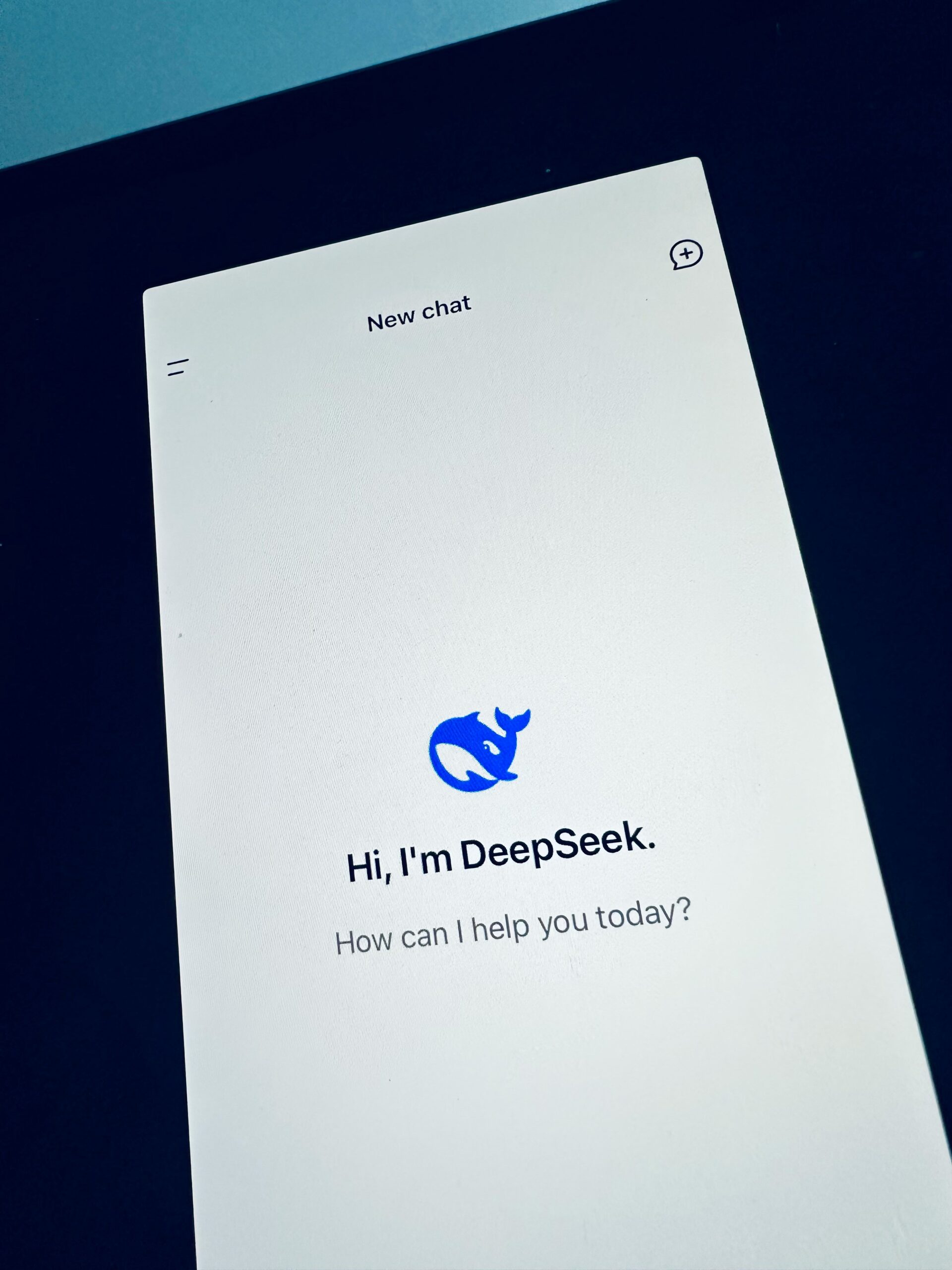

As artificial intelligence becomes more integrated into daily operations, a parallel trend is coming into the spotlight within enterprises: the growing popularity of shadow AI. The term describes employees independently adopting unauthorized AI tools, such as ChatGPT or Copilot, in departments including marketing, engineering, and operations.

While these shadow AI tools enhance efficiency and innovation, their unsanctioned use also exposes organizations to significant threats, ranging from data breaches and regulatory non-compliance to the inadvertent loss of intellectual property.

Growing Risk of Shadow AI in the Enterprise

The increasing acceptance of shadow AI has made data governance a top concern among business leaders. Recent findings indicate that data governance ranks as a top challenge for 49% of AI practitioners and 40% of leaders.

When data leaks into unmonitored channels, such as AI tools that employees use without oversight, sensitive information can be compromised. Such leaks can violate corporate policies and breach confidentiality agreements covering both proprietary and customer data. They can also trigger regulatory action and damage a company's reputation.

Unmonitored use of AI tools can also undermine established cybersecurity frameworks. AI tools that IT departments have not vetted may leave existing systems vulnerable to cyber threats and make it difficult for security teams to maintain visibility and control over data flows. The decentralized nature of shadow AI further complicates traditional governance models, which calls for a more dynamic approach to risk management.

Best Practices for Controlling Shadow AI Without Stifling Innovation

Despite these vulnerabilities, organizations cannot simply ban shadow AI tools outright, because doing so would stifle the innovation these tools enable. Forward-looking organizations are instead seeking balanced solutions that let employees harness the benefits of AI tools while minimizing their exposure to risk.

A key strategy is to educate employees about the risks of shadow AI so they can evaluate the capabilities and limitations of AI tools and assess the data risks of using them.

Establishing comprehensive AI usage policies provides a clear framework for acceptable practices. These policies, combined with robust monitoring of network traffic, help identify unauthorized usage early. Simultaneously, enterprises are investing in secure, internal alternatives, such as private GPT instances, which offer employees the same convenience while maintaining corporate oversight and control.
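To make the network-monitoring idea more concrete, here is a minimal Python sketch that scans proxy log lines for requests to public AI services that are not on an approved list. It assumes a simplified, hypothetical log format of `<user> <url>` per line. The domain watch list is illustrative rather than a vetted inventory, and treating Copilot as sanctioned is purely a hypothetical example of an allowlist entry.

```python
from collections import Counter
from urllib.parse import urlsplit

# Illustrative watch list of public AI services (not exhaustive).
KNOWN_AI_DOMAINS = {"chatgpt.com", "openai.com", "copilot.microsoft.com", "gemini.google.com"}

# Hypothetical allowlist: services the organization has formally approved.
SANCTIONED_AI_DOMAINS = {"copilot.microsoft.com"}


def _matches(host, domains):
    """Return True if host equals a listed domain or is a subdomain of one."""
    return any(host == d or host.endswith("." + d) for d in domains)


def find_unsanctioned_ai_usage(log_lines):
    """Count requests per (user, host) to known AI services that are not sanctioned.

    Assumes a hypothetical simplified log format: '<user> <url>' per line.
    """
    hits = Counter()
    for line in log_lines:
        parts = line.split()
        if len(parts) < 2:
            continue  # skip malformed lines
        user, url = parts[0], parts[1]
        host = (urlsplit(url).hostname or "").lower()
        if _matches(host, SANCTIONED_AI_DOMAINS):
            continue  # approved tool, nothing to flag
        if _matches(host, KNOWN_AI_DOMAINS):
            hits[(user, host)] += 1
    return hits
```

In practice, the resulting counts would feed a reporting or alerting pipeline. Flagging usage rather than blocking it fits the goal of steering employees toward sanctioned alternatives instead of banning AI tools outright.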

Encouraging innovation within governed environments fosters responsible AI adoption. When departments are empowered to experiment under controlled conditions, organizations can safely leverage AI-driven efficiencies without compromising their compliance obligations.

A New Approach to Enterprise AI Governance

Modern enterprise governance models emphasize agility over rigid control. As Srujan Akula, CEO of The Modern Data Company, explains, “One of the key aspects of being able to do this at scale is providing the control to your InfoSec teams so that they understand what is happening with the data.”

This perspective highlights the importance of real-time visibility into AI tool usage.

Unified governance frameworks now integrate metadata management, observability, and audit logging to create transparency across systems. Rather than confining data to static silos, dynamic governance applies controls at the point of data consumption. This allows enterprises to monitor usage patterns and enforce policies flexibly, aligning security requirements with business needs.
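Applying controls at the point of consumption, with every decision recorded in an audit log, can be sketched in a few lines of Python. The classification labels, the destination names (`internal_gpt`, `external_ai`), and the `ConsumptionGate` class are all hypothetical; a real deployment would load policies from its governance platform rather than a hard-coded dictionary.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical policy: which AI destinations may consume each data classification.
POLICY = {
    "public": {"internal_gpt", "external_ai"},
    "internal": {"internal_gpt"},
    "confidential": set(),  # no AI tool may consume confidential data
}


@dataclass
class AuditEvent:
    timestamp: str
    user: str
    dataset: str
    classification: str
    destination: str
    allowed: bool


@dataclass
class ConsumptionGate:
    policy: dict
    audit_log: list = field(default_factory=list)

    def authorize(self, user, dataset, classification, destination):
        """Check the policy at request time and record the decision either way."""
        # Unknown or missing classifications fall back to deny-by-default.
        allowed = destination in self.policy.get(classification, set())
        self.audit_log.append(AuditEvent(
            timestamp=datetime.now(timezone.utc).isoformat(),
            user=user,
            dataset=dataset,
            classification=classification,
            destination=destination,
            allowed=allowed,
        ))
        return allowed
```

Denying by default when a classification is unknown keeps unlabeled data from slipping through, and logging denied requests alongside allowed ones gives security teams a record of usage patterns to review.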

Final Thoughts

Shadow AI presents enterprises with a complex balancing act. While the risks are real, dismissing these tools outright ignores their transformative potential. By combining infrastructure controls with comprehensive employee education, organizations can mitigate dangers while unlocking new levels of productivity.

Solutions such as governed feature stores, internal AI models, and sophisticated metadata strategies offer a scalable path forward, ensuring that innovation thrives under the watchful eye of robust governance.