Image credit: Pexels

Artificial intelligence’s influence on the modern workplace now extends well beyond productivity metrics and operational efficiency. AI has been integrated into decision-making, performance evaluation, and communication, and this deeper adoption is changing what trust between leadership and the workforce means. The benefits are obvious, but the risks are just as significant. That raises a pressing question: What steps should organizations take to preserve their workforce’s confidence in leadership while benefiting from AI-enabled infrastructure?

AI and the New Trust Equation

AI now handles a growing share of analytical and evaluative work. This shift has strained the relationship between leaders and their teams as employees question how decisions are made, which data sources are prioritized, and whether algorithmic judgment reinforces or undermines fairness. Peter Whealy, Founder of Elevate Potential, argues that this moment represents a structural inflection point for leadership rather than a tooling upgrade.

Whealy describes a growing mismatch between the speed of AI-enabled decision-making and the slower human processes required to absorb and contextualize those decisions. The resulting gap often leaves employees feeling excluded from outcomes they are expected to trust. Leadership credibility, he notes, no longer comes from being the final authority on information, but from demonstrating how decisions are interrogated, validated, and stress-tested before action.

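Whealy believes that instead of passively adopting automation, leaders must become what he calls “trust stewards.” He points to the trust equation, a framework introduced by David Maister, Charles Green, and Robert Galford in The Trusted Advisor that is gaining traction in executive circles: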

“Trust = (Credibility + Reliability + Intimacy) / Self-orientation.”

The equation highlights a fragile balance. Credibility and reliability reflect competence and consistency, while intimacy captures empathy and psychological safety. Self-orientation sits in the denominator deliberately: the more a leader’s decisions are driven by margin, speed, or control, the lower overall trust falls, however strong the other three factors may be.

Additional tension emerges as AI challenges traditional authority structures. Whealy observes that leaders are no longer valued primarily for possessing knowledge, but for asking better questions of increasingly complex systems.

“My credibility as a leader is no longer about verification of the truth,” Whealy explains. “AI can process more data than I ever could. The responsibility now is to challenge outputs, surface risks, and help teams understand what the data does not say.”

This reframing places trust-building squarely in the realm of judgment, humility, and transparency. Leadership, in this context, becomes less about certainty and more about creating psychological safety around uncertainty as AI accelerates organizational change.

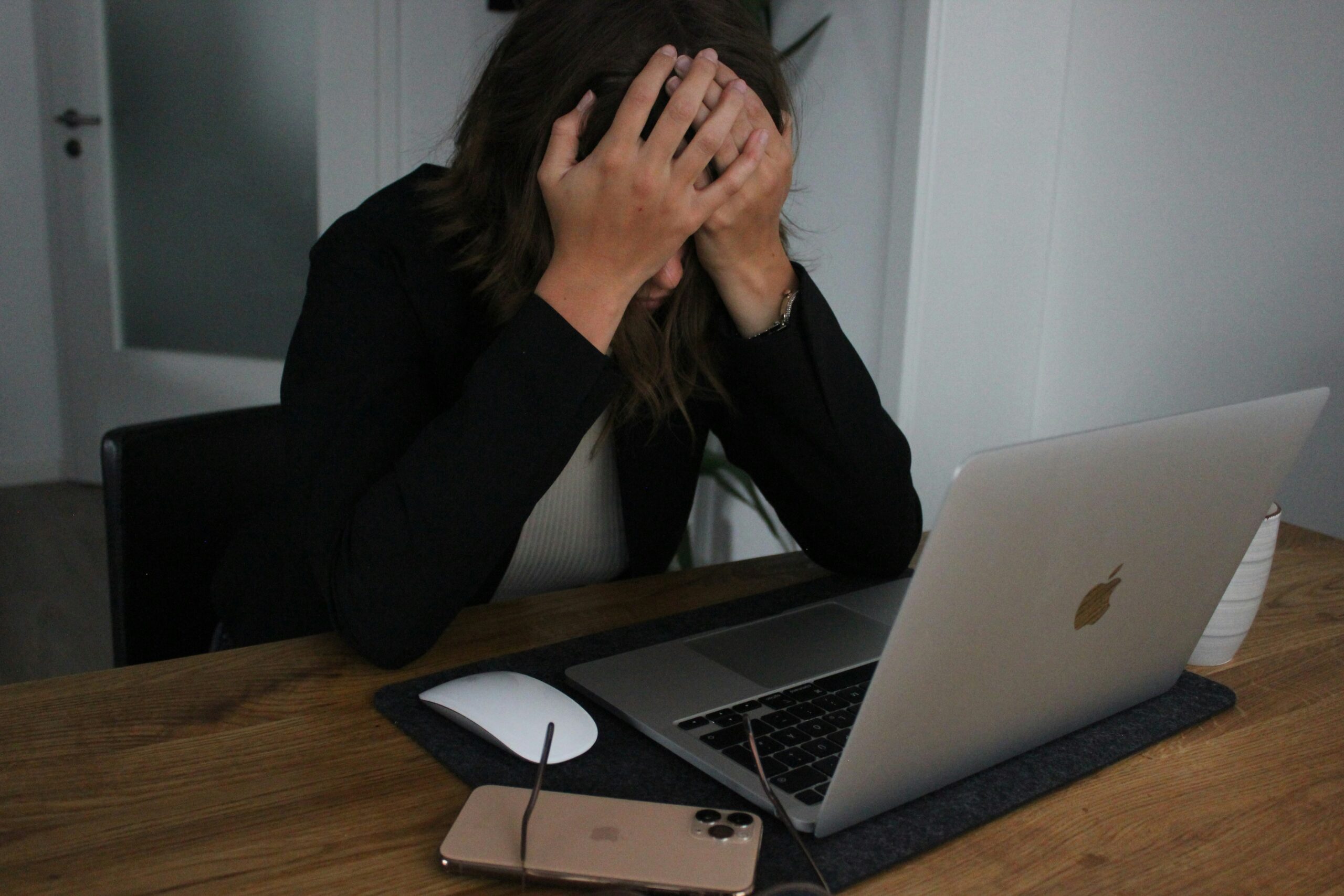

When Insight Turns Into Surveillance

AI’s ability to surface objective insights into performance has made it appealing to leadership teams seeking accountability. However, the distinction between measurement and monitoring often collapses during implementation. Ed Brzychcy, Founder and President of Lead from the Front, observes that many organizations introduce AI oversight tools precisely when trust is already fragile.

Brzychcy notes that surveillance-oriented systems frequently address symptoms rather than causes. Missed deadlines, inconsistent output, or disengagement are treated as visibility problems instead of signals of training gaps, unclear expectations, or misaligned incentives. The result is a self-reinforcing loop: heavier monitoring erodes morale, lower morale worsens the very symptoms being tracked, and those symptoms appear to justify still more monitoring.

“People are demoralized when they feel the machine sitting over their shoulder. It’s just micromanagement by machine,” Brzychcy explains.

Deeper cultural consequences often follow. Brzychcy warns that surveillance tools unintentionally train employees to do the minimum required to avoid scrutiny rather than encouraging initiative or ownership. “When people feel watched instead of supported, effort becomes compliance. The motivation shifts from contribution to self-protection,” he says.

Brzychcy emphasizes that organizations achieving healthier adoption focus on outputs and collaboration rather than time-to-task metrics. AI becomes a diagnostic instrument, revealing where processes break down, rather than a behavioral enforcer designed to correct individuals. In environments where mentorship, feedback, and development are already strong, AI tends to amplify trust rather than undermine it.

AI as an Empowering Force Multiplier

While concerns about misuse persist, some organizations are demonstrating how AI can increase transparency rather than diminish it. Amelia Wampler, Co-Founder of Formata, describes AI as a connective layer that reconciles fragmented operational and customer data, allowing teams to work from a shared source of truth.

Wampler emphasizes that trust hinges on who has access to insight. Systems designed exclusively for executive dashboards risk reinforcing power asymmetries, while inclusive data access encourages alignment. “We believe that everyone can benefit from that data,” she says. “It may just be surfaced in different ways to be most helpful for some people.”

Beyond access, Wampler highlights AI’s role as an objective intermediary. Pattern recognition across conversations, meeting transcripts, and customer feedback allows teams to move beyond anecdotal decision-making. “It becomes an objective third party. The data can say, ‘This is what your customers are actually talking about,’ even when that’s uncomfortable,” she explains.

This neutrality, according to Wampler, often improves accountability without introducing fear. Conversations shift from defensiveness to curiosity when insights are framed as shared signals rather than performance judgments. AI recommendations, she adds, should function as discussion starters rather than directives, reinforcing collaboration instead of hierarchy.

Culture as the Deciding Factor

Ultimately, AI’s impact on trust depends less on technical capability than on organizational culture. Heather Page, Chief of Staff at TribalScale, stresses that no system can compensate for leadership behavior that lacks transparency or respect for employees.

Page observes that job candidates and employees already experience hiring and performance systems as opaque. AI, when deployed thoughtfully, offers an opportunity to reverse that pattern by delivering clearer feedback and more consistent evaluation.

“No technology is going to fix a bad culture,” Page says. “But if you use it respectfully of your end user, then it can be effective.”

One of the most meaningful shifts Page describes involves feedback itself. AI-assisted synthesis lets organizations give candidates and employees concrete feedback that time constraints previously forced them to skip. “Something that would have taken thirty minutes per person can now be done in five. That changes whether feedback exists at all,” Page notes.

This shift carries symbolic weight. Transparency becomes experiential rather than aspirational when people receive explanations instead of silence. Page cautions, however, that trust evaporates quickly when AI-generated insights are weaponized or detached from human accountability. Respectful implementation, she argues, requires restraint, clarity of intent, and leaders willing to stand behind decisions rather than hiding behind systems.

Leadership Is Imperative

AI is no longer a future consideration but a present force reshaping daily work and organizational relationships. As intelligent systems assume greater analytic responsibility, leadership accountability becomes more visible rather than less. Trust is no longer built through authority alone, but through clarity, restraint, and demonstrated intent.

Organizations that treat AI as a collaborative ally, one that augments judgment, surfaces shared understanding, and supports human development, are more likely to sustain their workforce’s confidence. Those that deploy it as a control mechanism risk accelerating disengagement at the very moment when trust matters most.