Image credit: Pexels

Agentic AI is transforming how businesses operate by accelerating workflows and automating tasks once handled by human employees. The shift has delivered gains in precision and speed, but it comes with an emerging set of risks that many organizations have only just begun to recognize. Companies are now struggling to mitigate risks such as identity spoofing, unauthorized system access, and insufficient oversight mechanisms. These issues have surged to a scale that didn’t exist even a year ago.

The primary concern behind the rising reliance on agentic AI is that AI agents can log in as humans and perform actions on behalf of employees and customers, while no reliable system exists to distinguish the two. This blurring of identities is forcing leaders to confront a new security reality.

The New Threat Model: AI Agents With Human Credentials

Traditional login systems, built around passwords and basic multi-factor checks, assume that a human is sitting on the other side. In an AI-powered environment, that assumption no longer holds. Agentic AI can authenticate with saved credentials, navigate systems independently, and execute tasks without human intervention.

This capability introduces risks far beyond those posed by ordinary phishing attempts. Unauthorized data extraction, misrepresentation in legal or financial processes, and deepening exposure to cyberattacks are now part of the threat landscape.

Peter Horadan, CEO of Vouched, warns, “We cannot have a world where you can’t tell the difference between the AI and the human.”

Horadan’s concerns stem from real-world observations that non-human actors are already penetrating corporate systems at a pace most leaders underestimate. He explains that companies deploying his detection tool witness “tens or hundreds of events per day” in which AI attempts to log in with human credentials. That volume reframes the issue from a theoretical risk to an active security challenge that organizations must acknowledge. An unmonitored agent can easily delete user data, place unauthorized orders, or retrieve internal information that later becomes legally discoverable.

The urgency grows when Horadan turns to regulation. He raises questions that have no precedent in current law, asking whether interactions with AI that mimic professional roles, such as legal or medical advisors, should be treated as privileged communication. He believes standards bodies are only beginning to understand the implications and predicts that the next year will force decisive action. “Anybody with a login button is going to discover non-human actors showing up with their users’ usernames and passwords,” he says, emphasizing how universal the shift will be.

Trust, Permissions, and Guardrails

To grow sustainably, companies must invest in systems that manage transparency and control, not just speed or automation. Movadex argues that AI autonomy is only responsible when users can clearly understand and manage what an agent is doing. This includes visible activity logs, undo options, and explicit opt-ins for actions that alter data or processes.

Co-Founder Salome Mikadze captures the issue succinctly: “Trust is a feature, not an afterthought.”

Mikadze treats trust as a measurable relationship, not an abstract ideal. Her trust model draws on the pillars of competence, alignment, predictability, and accountability, which she describes as the core signals that determine whether users feel safe delegating decisions. “Trust is always our willingness to delegate our own cognitive or operational task to an AI system,” she explains. Each pillar influences that willingness in a distinct way. Her framework turns trust into a practical design requirement that companies must quantify and maintain.

Mikadze’s work also highlights the consequences of misalignment in autonomous systems. Enterprises face pressure to automate high-volume tasks, yet the speed of that automation can obscure hidden risks. “If there has been a mistake, no critical harm can occur and you can easily undo,” Mikadze says. Her point is that autonomy must always be paired with reversibility, transparency, and clearly communicated fallbacks. A system that cannot explain itself or allow humans to intervene is a system that does not deserve the trust it seeks.
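The guardrails described in this section (a visible activity log, undo options, and explicit opt-ins for actions that alter data) can be illustrated with a minimal Python sketch. It is not Movadex’s implementation; `AgentSession`, `LoggedAction`, and every parameter name below are hypothetical, chosen only to show one way the pieces could fit together.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class LoggedAction:
    description: str          # human-readable entry shown in the activity log
    undo: Callable[[], None]  # callable that reverses the action


@dataclass
class AgentSession:
    # Hypothetical container for everything an agent did in one session.
    log: List[LoggedAction] = field(default_factory=list)

    def perform(self, description, do, undo, alters_data=False, user_opted_in=False):
        """Run an action; data-altering actions require an explicit opt-in."""
        if alters_data and not user_opted_in:
            raise PermissionError(f"opt-in required: {description}")
        do()
        self.log.append(LoggedAction(description, undo))

    def undo_last(self):
        """Reverse the most recent action and return its description."""
        action = self.log.pop()
        action.undo()
        return action.description


# Usage: the agent changes a record, and the user can see and reverse it.
records = {"plan": "basic"}
session = AgentSession()
session.perform(
    "upgrade plan to pro",
    do=lambda: records.update(plan="pro"),
    undo=lambda: records.update(plan="basic"),
    alters_data=True,
    user_opted_in=True,
)
```

In this sketch every action carries its own undo step, so a mistake can be rolled back from the log rather than repaired by hand.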

Controlled Autonomy: Building AI With Guardrails

Some organizations are addressing these concerns by redesigning AI systems to operate within strict boundaries. Tonkean’s approach, known as deterministic autonomy, ensures agents perform only within predefined limits. This model avoids the unpredictability often associated with large-scale automation and prevents agents from taking actions beyond what organizations can monitor or reverse.

Rory O’Brien, Tonkean’s VP of agents, emphasizes the principle behind this structure: “You can’t give an agent what you’re not even giving humans.”

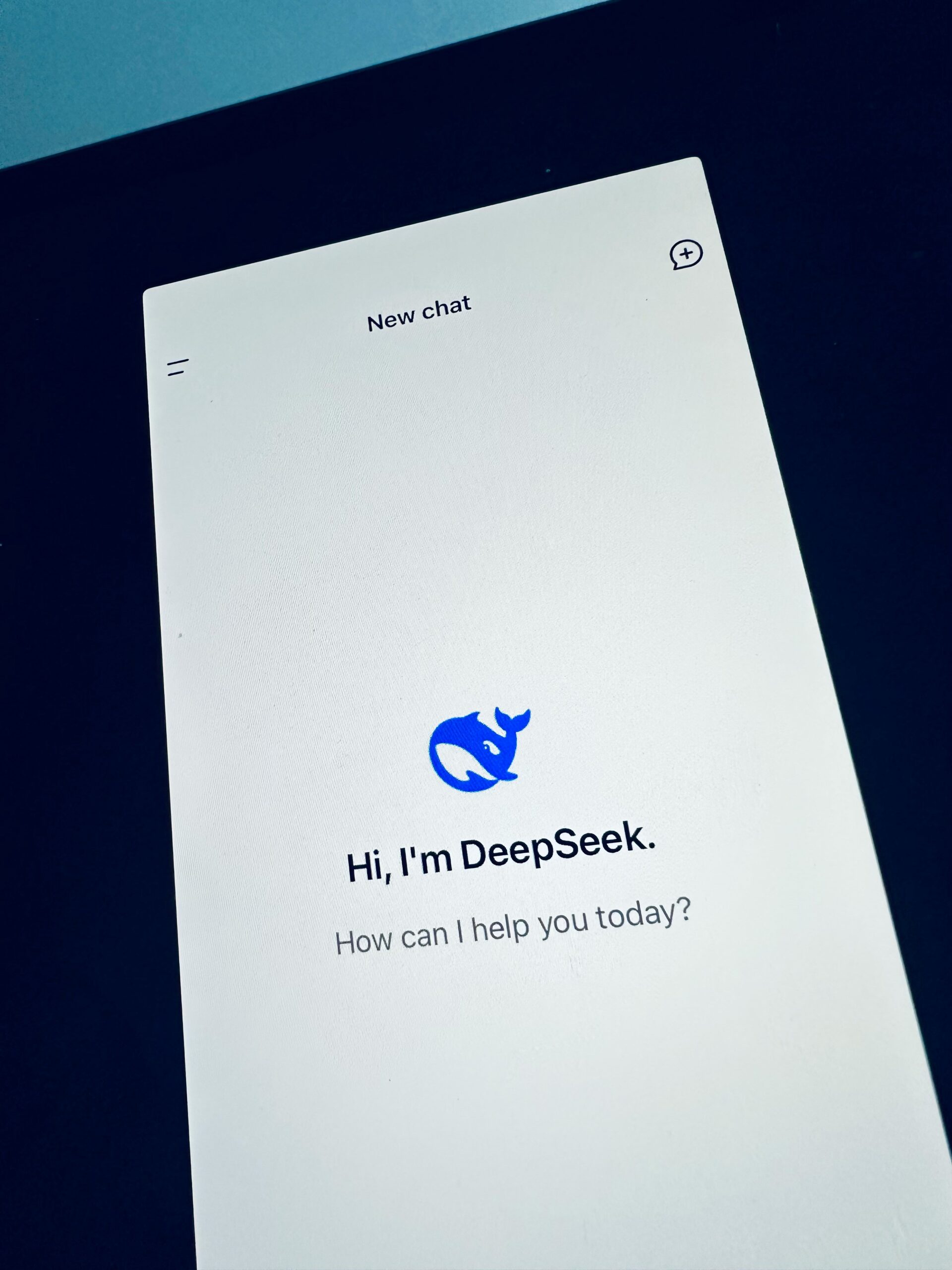

Organizations may unknowingly recreate old automation problems under a new AI banner. O’Brien describes the emergence of “AI slop”: employees across departments building agents and automations without oversight, in the same way people once created unregulated Zapier or Power Automate workflows. The result is an environment where undocumented agents proliferate, making it difficult to track ownership, understand dependencies, or predict system behavior when employees leave or when underlying platforms change.

Tonkean’s decade of infrastructure work shapes its philosophy on autonomy. O’Brien explains that the platform has always been designed to abstract data sources and integrate cleanly with IT governance. Permissions are not an afterthought for Tonkean but a layered structure that cascades from service accounts to system-level access and finally to agent-level permissions. “Agents are only as effective as the access you give them,” O’Brien stresses, illustrating that responsible autonomy begins with rigorous system design rather than clever prompting.
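One way to picture a permission structure that cascades from service accounts to system-level access to agent-level permissions is as a set intersection: an agent can do only what every layer above it also allows. The Python sketch below is an illustration under that assumption, not Tonkean’s actual design; the function name and the permission strings are hypothetical.

```python
def effective_permissions(service_account, system_access, agent_grants):
    """Return only the permissions allowed by all three layers."""
    return set(service_account) & set(system_access) & set(agent_grants)


# Hypothetical permission strings for each layer.
service_account = {"crm:read", "crm:write", "billing:read"}
system_access = {"crm:read", "crm:write"}
agent_grants = {"crm:read", "billing:read"}

allowed = effective_permissions(service_account, system_access, agent_grants)


def is_allowed(action):
    """Check a requested action against the agent's effective permissions."""
    return action in allowed
```

Here the agent was granted `billing:read`, but the system layer never exposed it, so the request is refused. Granting something at the agent level cannot widen what the layers above permit.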

Training Before Transformation

Even with the proper guardrails, many companies stumble early by skipping foundational education. C4 Technical Services and Bodhi AI report that up to 95% of AI adoption efforts fail due to inadequate training. Businesses often deploy AI systems without ensuring that teams understand how these agents learn, what data they access, or the risks associated with their use.

Zac Engler, Chief AI Officer at C4, highlights the blind spot: “If you don’t know what the AI is learning from you, you don’t know what you’re giving away.”

For organizations handling sensitive information, that lack of visibility becomes an immediate liability.

Engler warns further about shadow AI. He observes that blanket bans rarely succeed because employees simply turn to unapproved tools that offer immediate convenience. He recounts how workers secretly generate presentations using consumer AI apps because the alternative requires hours of manual effort. “Everyone knows you can spend four hours building it or hop over to Gamma and have it done in fifteen seconds,” he says, showing how poor policy creates quiet fragmentation across an organization’s workflows.

Engler goes further, arguing that autonomy requires a clear understanding of internal operations. Companies cannot responsibly deploy autonomous agents until they understand their own processes with precision. He recommends full process mapping and screen recordings to document every step of a workflow. “Worst case scenario, you have a better understanding of what’s going on. Best case scenario, you can map out exactly where AI makes sense and where you should keep a human in the loop,” Engler explains, emphasizing that clarity should be treated as a prerequisite for autonomy rather than a byproduct.

Final Thoughts: A Shift Businesses Can’t Ignore

Agentic AI is being adopted across industries faster than ever, and the pace is still increasing. To stay ahead, firms need to embrace multi-tiered permission models, deploy identity systems that can distinguish between human users and AI agents, and make trust and transparency the basis for every interaction.

Those who prepare early will reduce risk and retain greater control as AI systems become deeply embedded in day-to-day activities. The others might find themselves responding to unintended consequences they could have easily avoided.